Eliminating Child Sexual Abuse Material: The Role and Impact of Hash Values

April 18, 2016


John Shehan is the Vice President of the Exploited Child Division at the National Center for Missing & Exploited Children. He also serves as Vice President to INHOPE, a network of international hotlines combatting child sexual abuse online, and as an advisory board member to the College of Humanities & Behavioral Sciences at his alma mater, Radford University. His blog post is part of Thorn’s hashing series, which highlights the benefits of hashing technology for industry, law enforcement, nonprofits, and service providers as they work to detect and remove child sexual abuse material online.

“Every day of my life I live in constant fear that someone will see my pictures and recognize me and that I will be humiliated all over again. It hurts me to know someone is looking at them — at me — when I was just a little girl being abused for the camera. I did not choose to be there, but now I am there forever in pictures that people are using to do sick things. I want it all erased. I want it all stopped. But I am powerless to stop it just like I was powerless to stop my uncle.”

This quote is part of a victim impact statement by Amy[1], which clearly portrays the long-lasting impact of child sexual exploitation. The harm is compounded when the abuse is recorded in digital images or videos and shared online. Many of these child victims, like Amy, are now adults, living with the painful realization that images of their sexual abuse are traded online regularly among people with a sexual interest in children.

How can we use technology to isolate child sexual abuse material online?

“I want it all erased. I want it all stopped.”

Can this be done? Does technology exist that can find a single image amongst millions of others? If such technology does exist, can it be scaled to the billions of images uploaded online every day? How can child sexual abuse images be isolated online while still respecting the privacy of legitimate online users? This is a complex issue, to say the least, but as we have learned, it can be done with very specific technology that harnesses the power of hash values.

It started in 2006, when the Technology Coalition, a group of leaders in the Internet services sector, and the National Center for Missing & Exploited Children (NCMEC) teamed up to address this growing problem, and an idea was born. The idea was simple: NCMEC could provide hash values derived from child sexual abuse images that Electronic Service Providers (ESPs) had previously reported to NCMEC’s CyberTipline.

Developing the CyberTipline to reduce illegal photos

The CyberTipline is the national mechanism for reporting suspected child sexual exploitation. ESPs could voluntarily participate by comparing the NCMEC hash values against hash values of images that users had already uploaded to their platforms. Images matching a hash value derived from a child sexual abuse image could be removed by the ESP and reported to the CyberTipline. It was an exciting concept that offered a technological opportunity to reduce the proliferation of child sexual abuse material online. Still, everyone was cautiously optimistic, because we knew this would be like looking for a very tiny needle in a very large haystack.
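The matching step described above can be sketched in a few lines of Python. This is a minimal illustration only, assuming the shared list is a set of lowercase hexadecimal SHA-1 digests and that each upload is available as raw bytes; the function names and data layout are hypothetical and do not reflect NCMEC's actual hash-list format or any ESP's real pipeline.

```python
import hashlib


def sha1_hex(data: bytes) -> str:
    """Return the SHA-1 digest of raw file bytes as a lowercase hex string."""
    return hashlib.sha1(data).hexdigest()


def scan_uploads(uploads: dict[str, bytes], known_hashes: set[str]) -> list[str]:
    """Return the IDs of uploads whose SHA-1 digest appears in known_hashes.

    `uploads` maps a hypothetical upload ID to that file's bytes, and
    `known_hashes` is a set of lowercase hex digests. Only byte-for-byte
    identical files match. Removal and reporting of flagged uploads are
    outside the scope of this sketch.
    """
    flagged = []
    for upload_id, data in uploads.items():
        if sha1_hex(data) in known_hashes:
            flagged.append(upload_id)
    return flagged


# Usage with harmless placeholder bytes standing in for image files.
known = {sha1_hex(b"known-file-bytes")}
uploads = {"a1": b"known-file-bytes", "b2": b"unrelated-bytes"}
print(scan_uploads(uploads, known))  # ['a1']
```

Because each check is a set lookup, the cost per upload stays roughly constant as the hash list grows, and the platform only ever compares digests rather than inspecting the content of other users' files. The catch is that only byte-identical files match.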

NCMEC and tech companies partner to implement a more robust technology with PhotoDNA

As we began to carefully walk down this path, we learned of the limitations of hashing technologies such as one-to-one image matching with MD5 and SHA-1 hashes. These hashes match only byte-for-byte identical files, so a resized or re-saved copy of a known image produces an entirely different value, which meant that finding duplicate images would take a long time. Undeterred, we presented this challenge to several technology companies, and not surprisingly they stepped up. A more robust image-matching technology, called PhotoDNA, was created. It was a game changer. Since then, PhotoDNA has become one of the most significant tools ever created for reducing child sexual abuse material online. Every year more ESPs adopt PhotoDNA, increasing its success.
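The difference between exact and robust matching can be illustrated with a toy example. PhotoDNA's algorithm is not described here, so this sketch swaps in average hashing (aHash), a simple, publicly known perceptual hash, purely for illustration; the 8x8 grayscale grids and helper names are hypothetical. Brightening every pixel slightly changes the SHA-1 digest completely, while the average hash stays the same.

```python
import hashlib


def raw_bytes(pixels: list[list[int]]) -> bytes:
    """Flatten a grid of 0-255 grayscale values into bytes (stand-in for a file)."""
    return bytes(p for row in pixels for p in row)


def average_hash(pixels: list[list[int]]) -> int:
    """Toy perceptual hash (aHash): one bit per pixel, set when the pixel is
    brighter than the grid's mean. This is NOT PhotoDNA, whose algorithm
    is proprietary; it only illustrates the idea of a robust hash."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


# A hypothetical 8x8 gradient, a slightly brightened copy, and an inverted image.
original = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
brightened = [[min(255, p + 3) for p in row] for row in original]
inverted = [[255 - p for p in row] for row in original]

exact_match = (hashlib.sha1(raw_bytes(original)).digest()
               == hashlib.sha1(raw_bytes(brightened)).digest())
print(exact_match)  # False: a tiny edit defeats exact matching
print(hamming(average_hash(original), average_hash(brightened)))  # 0
print(hamming(average_hash(original), average_hash(inverted)))  # 64
```

Robust schemes typically compare hashes by distance against a threshold rather than requiring equality: the brightened copy lands at distance 0, while the visually different inverted grid differs in all 64 bits.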

The success and voluntary adoption of hash value scanning by ESPs has had a monumental positive impact on reducing child sexual abuse images online. In 2012, Thorn led the creation of an Industry Hash Sharing Platform, which allows ESPs to expand their hash lists of child sexual abuse images by voluntarily sharing datasets amongst each other. The platform grew in participation and was transitioned to NCMEC in 2014. Around that same time, NCMEC created a secondary platform that allows non-governmental organizations to make child sexual abuse hash values available to ESPs. Over time, the number of child sexual abuse hash values has grown, giving ESPs an even greater ability to voluntarily impact this issue.

An effective solution to combat child sexual exploitation

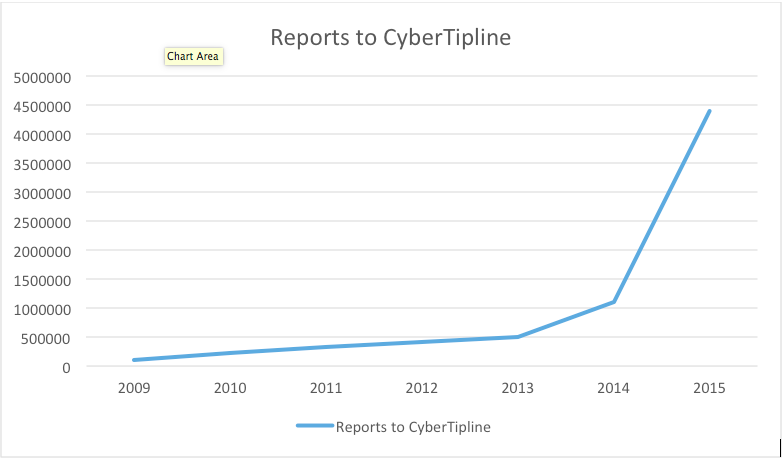

Proactive scanning for child sexual abuse images is voluntary, but the reporting aspect is not. When an ESP becomes aware of child pornography on its network, it is required by law to submit a report to NCMEC’s CyberTipline. Fast forward to 2016. The voluntary implementation of child sexual abuse hash-value scanning has continued to grow, both in the number of companies using this technology and in the size of the hash value list. In 2015, reports to NCMEC’s CyberTipline skyrocketed to 4.4 million, shattering previous records. Ninety-four percent of these reports involved uploads of apparent child pornography from outside the United States. Because of this increased volume, NCMEC has evolved into a global clearinghouse of information. NCMEC makes CyberTipline reports available to local, state and federal law enforcement in the United States, to more than 100 national police forces around the world, and to international agencies including Europol and Interpol.

The widespread adoption of these technology tools speaks to the precision with which technology companies can identify and remove this content. This surgical precision addresses the need to find the heinous illegal material quickly and proactively, while balancing the privacy concerns of legitimate Internet users.

Having devoted the past 16 years of my career to child protection at NCMEC, I can confidently say that hash values are the way forward. Together, we have made a huge difference in the lives of children around the world, and I am proud to have been a part of making these tools a reality.

For more information about the National Center for Missing & Exploited Children, please visit us at www.missingkids.org.

[1] Name changed to protect the identity of the victim.
